The advent of Large Language Models (LLMs) has revolutionized various applications in the field of natural language processing. These models, however, face inherent challenges that impact their effectiveness: they are static, and they lack domain-specific knowledge, both of which limit their applicability in dynamic contexts.

Recently, researchers have developed a solution known as Retrieval-Augmented Generation (RAG) to address these issues. RAG combines the retrieval of specific, high-quality knowledge sources with the generative capabilities of models like ChatGPT to enhance accuracy and context relevance.

This blog post explores RAG’s significance, its components, the criteria for selecting knowledge sources, and the role of platforms like StackSpot AI in integrating these sources. The aim is to shed light on the enhanced capabilities and potential applications of RAG-enhanced LLMs in various domains.

Challenges of using LLMs

General-purpose language models can be tuned to perform everyday tasks such as sentiment analysis and named entity recognition. Typically, these tasks do not require additional contextual knowledge.

However, when additional knowledge is necessary, these Large Language Models (LLMs) exhibit two significant disadvantages:

- They are static: LLMs are “frozen in time” and do not have access to information newer than their training data.

- They lack domain-specific knowledge: LLMs are trained on public data, meaning they do not know your company’s private data.

Unfortunately, these issues affect the accuracy of applications that utilize LLMs.

What is Retrieval-Augmented Generation (RAG)?

For more complex and knowledge-intensive tasks, researchers from Meta AI introduced a method called Retrieval-Augmented Generation (RAG), which also helps to mitigate the problem of “hallucination”.

However, the efficacy of RAG-based systems hinges on establishing high-quality knowledge sources.

Knowledge sources are representative documents that enhance the generative component of RAG systems. Such sources are pivotal in providing the necessary depth and breadth of information, enabling these systems to deliver enriched, contextually relevant content that meets the nuanced demands of users.

How does Retrieval-Augmented Generation (RAG) work?

In summary, a RAG system can be broken down into two main components: the retrieval component and the generative component.

- Retrieval Component: This component fetches relevant information from a specified dataset. Typically, it uses information retrieval techniques or semantic search to identify the most relevant data for a given query. It is efficient at sourcing relevant information but cannot generate new content.

- Generative Component: This component creates new content based on a given prompt. Language models like ChatGPT are well suited to this type of task.

Identifying and selecting representative knowledge sources is essential for the retrieval component to function effectively. Knowledge sources are the documents that the retrieval component stores and searches, and the documents it returns later serve as the foundation for the generative component’s output.

The quality of these sources directly impacts the quality of the answers generated. Accurate, current, and comprehensive knowledge sources ensure the information retrieved is relevant and reliable, enabling the generative component to produce well-informed and precise responses.
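The two components described above can be sketched in a few lines of Python. This is a minimal illustration rather than a production design: it assumes a simple bag-of-words cosine similarity in place of a real semantic search, and the function names (`retrieve`, `build_prompt`) and the sample documents are hypothetical. The generative step is left out; the assembled prompt is what would be passed to whichever language model you use.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, knowledge_sources, top_k=2):
    """Retrieval component: return up to top_k documents that overlap with the query."""
    query_vec = Counter(tokenize(query))
    scored = [
        (cosine_similarity(query_vec, Counter(tokenize(doc))), doc)
        for doc in knowledge_sources
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    # Documents with no overlap at all are dropped instead of padding the context.
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(query, retrieved_docs):
    """Assemble the augmented prompt handed to the generative component."""
    context = "\n".join(f"- {doc}" for doc in retrieved_docs)
    return (
        "Answer the question using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {query}"
    )


# Hypothetical knowledge sources, for illustration only.
knowledge_sources = [
    "Refunds are processed within five business days.",
    "The payment API accepts credit cards and bank transfers.",
    "Office hours are nine to five on weekdays.",
]

question = "Which payment methods does the API accept?"
retrieved = retrieve(question, knowledge_sources, top_k=1)
prompt = build_prompt(question, retrieved)
print(prompt)
```

Because the generative component only sees what `retrieve` returns, a stale or irrelevant document in `knowledge_sources` flows straight into the prompt, which is why the selection criteria discussed next matter.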

Why is it important to have representative knowledge sources?

Knowledge sources are the bedrock of any robust information retrieval and processing system. The integrity and utility of these systems hinge on the quality and representativeness of the information they draw upon.

Representative knowledge sources ensure that the data is not only pertinent and authoritative but also mirrors the multifaceted nature of the real world. They enable the delivery of insights that are accurate, comprehensive, and applicable to current scenarios.

In essence, selecting representative knowledge sources is a crucial determinant of a system’s ability to provide relevant, reliable, and nuanced responses, which is indispensable for LLM-based applications.

Criteria for selecting representative knowledge sources

Here, we’ve compiled a list of items aimed at assisting developers in building their LLM-based applications and guiding users of such systems to maximize their benefits. These curated items are designed to ensure an optimal experience in leveraging the capabilities of language models.

Relevance

A relevant knowledge source is intricately linked to the specific domain it aims to serve. Take, for instance, the task of developing software for project management. In this scenario, a knowledge source that contains the latest articles, case studies, and best practices in agile methodologies, software lifecycle management, and team productivity tools would be invaluable.

Relevance is critical to ensure that the data retrieved is precisely what is needed for the software’s context, enhancing the focus and effectiveness of the generative output in providing practical answers for developers.

Comprehensiveness

A comprehensive knowledge source offers extensive subject coverage, including various subtopics, opinions, and recent advancements.

For instance, in creating an algorithm for natural language processing (NLP), a comprehensive knowledge base would not only cover fundamental NLP techniques but also delve into advanced topics such as sentiment analysis, machine translation, and speech recognition, including the latest research methodologies.

This breadth ensures that the information retrieved is detailed and provides a holistic view of the topic.

Currency

Currency in knowledge sources is about the freshness and timeliness of the information, which is particularly crucial in fast-evolving fields such as software development. For example, when developing a cybersecurity application, knowledge sources need to include the latest vulnerabilities.
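One simple way to enforce currency is to record when each knowledge source was last updated and exclude anything older than a chosen cutoff before it reaches the retrieval component. The sketch below assumes each source carries a `last_updated` date; the `KnowledgeSource` class, the one-year threshold, and the sample entries are hypothetical and would need tuning per domain.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class KnowledgeSource:
    title: str
    last_updated: date


def filter_current(sources, today, max_age_days=365):
    """Keep only sources updated within max_age_days of `today`."""
    cutoff = today - timedelta(days=max_age_days)
    return [s for s in sources if s.last_updated >= cutoff]


# Hypothetical sources and dates, for illustration only.
sources = [
    KnowledgeSource("Vulnerability advisory digest", date(2024, 5, 1)),
    KnowledgeSource("Legacy hardening guide", date(2019, 3, 12)),
]

fresh = filter_current(sources, today=date(2024, 6, 1), max_age_days=365)
fresh_titles = [s.title for s in fresh]
print(fresh_titles)
```

Passing `today` explicitly keeps the filter deterministic and easy to test; in a running system it would typically be `date.today()`.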

Accuracy

Accuracy is fundamental to the reliability of any knowledge source: the information should be precise and verified against established benchmarks. In software development, for instance, accuracy is paramount when building a financial software system, where an incorrect figure or rule in a source can carry over into the generated answer.

Bias-free

Finally, a bias-free knowledge source is critical for imparting objective information, devoid of personal or cultural biases.

In software development, consider the creation of a recommendation engine. By ensuring the data is not tilted by particular viewpoints or preferences, the recommendation engine can provide users with choices that are fair, balanced, and reflective of a diverse array of user experiences.

Knowledge Sources at StackSpot AI

StackSpot AI presents a user-friendly interface designed to upload pertinent knowledge sources seamlessly. This interface has an advanced query mechanism that leverages sophisticated information retrieval techniques to locate documents efficiently.

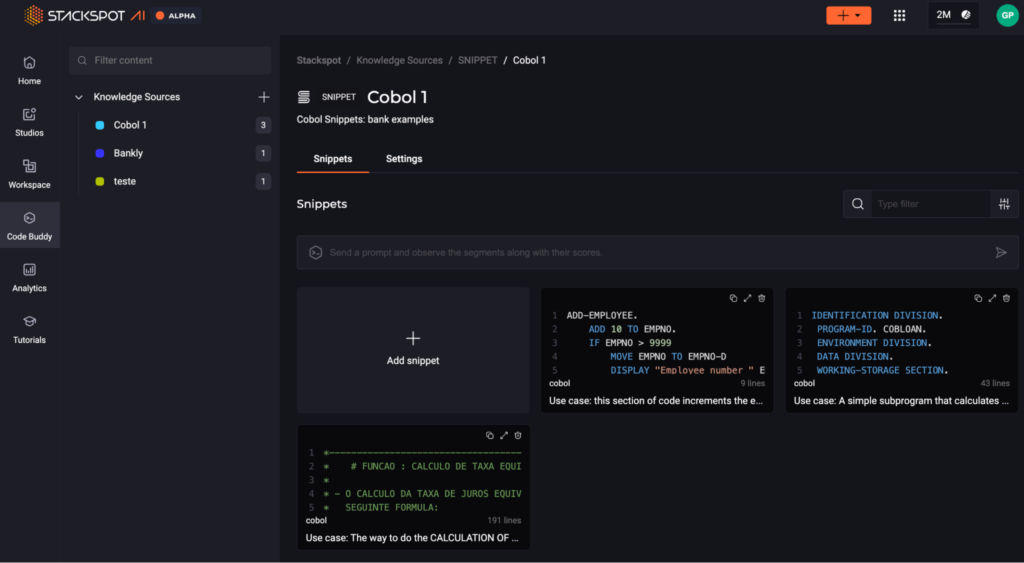

The figure above shows the portal feature for uploading knowledge sources.

Once a diverse array of knowledge sources has been integrated into the portal, the StackSpot AI plugin empowers users to execute their queries through user prompts. This is achieved without the need for users to be aware of the specific knowledge sources at their disposal.

Essentially, the plugin includes a powerful search engine that intelligently matches user queries to the most relevant knowledge sources. For example, when a query such as “create a payment method” is input, the engine searches for and aligns the query with the appropriate knowledge sources. These could range from a Java class that details the procedure for implementing a payment method to an API that facilitates a connection to a payment service.

Through this intuitive and efficient process, the platform ensures that users can easily and precisely access and utilize the wealth of information stored within the system.

Conclusion

The success of language model-based applications, especially those requiring a nuanced understanding of complex subjects, relies heavily on the quality of their underlying knowledge sources.

As we navigate the ever-evolving landscape of software development and data analysis, the principles of relevance, comprehensiveness, currency, accuracy, and the absence of bias form the pillars upon which effective and reliable Retrieval-Augmented Generation (RAG) systems are built.

By meticulously curating these knowledge sources, developers and users alike can harness the full potential of language models, ensuring that the output is not only informative and relevant but also a reflection of the latest, most accurate data available.
