First introduced in 2017, transformers have become central to the evolution of Large Language Models (LLMs) such as OpenAI’s GPT-3.

These models have fundamentally changed the approach to natural language processing and deep learning, allowing models to process and generate text with a keen understanding of context.

In this blog post, we summarize the existing literature on transformers and LLMs and show how these models can help build AI coding assistants such as StackSpot AI.

Transformers and the rise of Large Language Models (LLMs)

The key feature of Transformers is their self-attention mechanism. For every token in a sequence, self-attention scores how relevant every other token is and weights its contribution accordingly, so the model can dynamically focus on the most relevant parts of the text. This is essential for capturing long-range relationships and understanding complex language nuances.
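To make the mechanism concrete, here is a minimal pure-Python sketch of scaled dot-product self-attention, the form used in the original transformer. It is deliberately simplified: a real transformer first projects each token embedding into separate query, key, and value vectors using learned weight matrices and runs several attention heads in parallel, whereas this sketch assumes hypothetical 2-dimensional toy embeddings and uses them directly as queries, keys, and values.

```python
import math


def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over a single sequence.

    Each argument is a list of vectors, one per token. Returns (outputs, weights),
    where weights[i][j] is how strongly token i attends to token j.
    """
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Dot product of this query with every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        # Each output is the attention-weighted sum of the value vectors.
        out = [sum(w[j] * values[j][d] for j in range(len(values)))
               for d in range(len(values[0]))]
        outputs.append(out)
        weights.append(w)
    return outputs, weights


# Toy usage: hypothetical embeddings, used directly as queries, keys, and values.
tokens = ["the", "cat", "sat"]
emb = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(emb, emb, emb)
for tok, row in zip(tokens, weights):
    print(tok, [round(x, 2) for x in row])
```

Each row of `weights` sums to 1, so every output is a weighted average of the value vectors. In this toy example, "sat", whose vector overlaps with both other tokens, attends most strongly to itself.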

Transformers have profoundly influenced artificial intelligence (AI). Trained on large text datasets, transformer-based models can answer questions, translate languages, and produce coherent, context-relevant content. They perform well across a range of natural language processing tasks and have advanced technologies such as virtual assistants, task automation, machine-assisted writing, and software development.

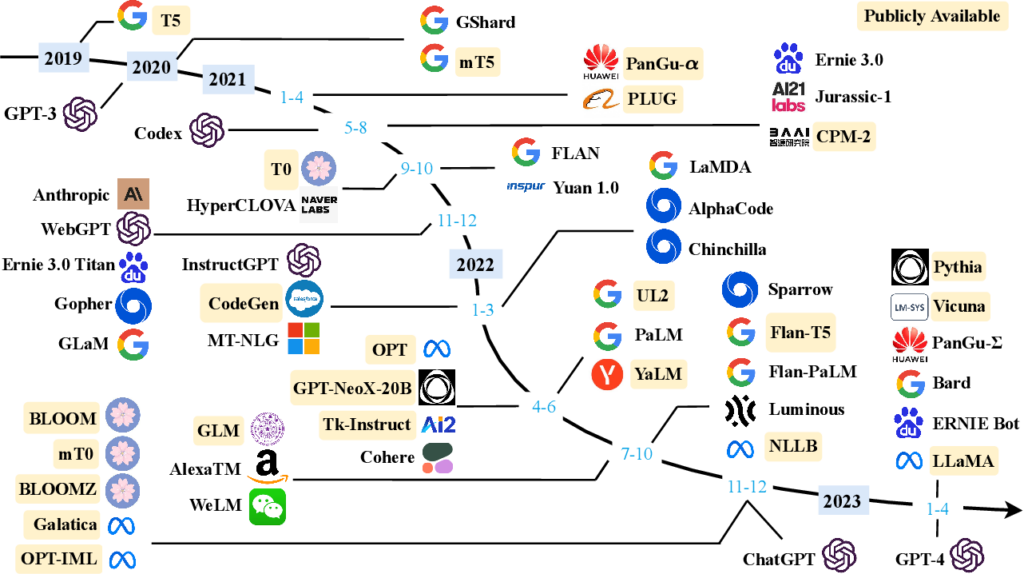

The figure above, taken from this research paper, illustrates the evolution of LLMs. It traces the development of models such as T5, GPT-3, Codex, and GPT-4, reflecting ongoing innovation and refinement in natural language processing.

This timeline highlights the technological progress and the growing relevance of LLM research in AI and language understanding.

At their foundation, LLMs are deep neural networks: many stacked layers of artificial neurons trained on broad text collections. Through this training, they learn to recognize patterns, grammatical structure, and semantic relationships. Their core training objective is to predict the next word (more precisely, the next token) in a text sequence. Repeating this prediction across an enormous corpus gradually improves the model’s grasp of context and its ability to generate coherent text.
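The next-word objective can be illustrated with a deliberately tiny stand-in. The sketch below assumes a toy bigram model, in which simple counts of which word follows which replace the deep neural network, trained on a made-up three-sentence corpus. It shows the two ideas from the paragraph above: estimating a probability distribution over the next word, and generating text by repeatedly appending a predicted word (here, greedily choosing the most likely one). Real LLMs predict subword tokens conditioned on the entire preceding context, not just the previous word.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count which words follow each word (a toy stand-in for learned weights)."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def next_word_probs(model, word):
    """Turn follow-counts into a probability distribution over the next word."""
    counts = model.get(word, Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def generate(model, start, max_words=5):
    """Greedy autoregressive generation: repeatedly append the most likely next word."""
    out = [start]
    for _ in range(max_words):
        probs = next_word_probs(model, out[-1])
        if not probs:
            break  # No known continuation for this word.
        out.append(max(probs, key=probs.get))
    return " ".join(out)


# Hypothetical toy corpus.
corpus = [
    "the model predicts the next word",
    "the model learns the next pattern",
    "the next word depends on context",
]
model = train_bigram(corpus)
print(next_word_probs(model, "the"))
print(generate(model, "the", max_words=4))
```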

LLM-based tools

With their vast number of trainable parameters, LLMs such as GPT-3, and tools built on them such as GitHub Copilot, have absorbed a wide range of knowledge on various topics. This enables them to understand intricate queries, create new content, and even mimic the writing styles of human authors, which makes them useful for producing text in diverse applications.

In software development, LLMs are increasingly used to assist programmers with tasks ranging from simple to complex, such as completing a line of code, drafting whole functions, writing tests, or explaining unfamiliar code.

The accuracy of the output from these AI code assistants is crucial. Errors in the generated code can lead to security vulnerabilities, malfunctions, and other problems affecting the quality and reliability of the software. Therefore, it is vital to assess and manage the output of these assistants to maintain the integrity of the final code.

The application of AI to code generation raises several key considerations, including ethical issues, liability, privacy, and responsibility for the generated code. Software engineers must address these factors, and they profoundly affect how intelligent assistants are received and used in software development.

User studies with LLM-based tools

As AI code assistants become more widespread, understanding the benefits and challenges they present to software developers has gained importance. Most studies so far have focused on specific code generation tools, notably GitHub Copilot.

Jiang et al. explored GenLine, an LLM-based tool that translates natural language requests into code. In their study, 14 participants with varying levels of front-end coding experience built web applications using GenLine. The findings showed that participants had to adapt how they interacted with the tool, learning particular wordings and phrasings to get the desired output. When the tool’s responses were unexpected, they often rephrased their requests, changed the information they provided, or tweaked GenLine’s settings.

Barke et al. studied how 20 people, including professional developers and students, used Copilot in a realistic programming scenario. They found two distinct modes of use: in acceleration mode, programmers used Copilot to speed up tasks they already knew how to do, while in exploration mode, they used it to work through uncertainty about how to proceed.

Bird et al. drew on three data sources to investigate how software developers used GitHub Copilot in January 2022. First, discussions on public forums shed light on how Copilot was used and on its associated challenges, such as licensing issues and workflow disruptions; these reports also indicated that users had a weaker understanding of how the generated code worked. Second, they ran an experiment in which five expert developers created a simple game. Third, they included data on Copilot’s effect on productivity.

These studies mostly examined developers’ experiences in controlled environments, focusing on their interactions during straightforward tasks. Future research is expected to explore these aspects in more realistic settings, and this is where StackSpot AI comes in.

Meet StackSpot AI

Unlike general-purpose coding assistants such as GitHub Copilot or Amazon CodeWhisperer, StackSpot AI is an AI coding assistant tailored to the specific needs of individual developers and projects.

StackSpot AI uses a Retrieval-Augmented Generation (RAG) mechanism, which combines a retrieval component with a generation component.

The retrieval component uses information retrieval techniques to find, within a chosen dataset, the documents most relevant to a user query. While effective at sourcing information, it cannot generate new content. The generation component fills that gap, using OpenAI’s GPT-4 to produce new text. We wrote another blog post describing how StackSpot AI was architected.
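StackSpot AI’s actual retrieval technique is not detailed here, so the following is only an illustrative sketch of what a retrieval step does: it ranks documents by the cosine similarity between bag-of-words term counts of the query and of each document. The document IDs and contents are hypothetical, and production RAG systems typically use learned embeddings and a vector index rather than raw word counts.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word-like tokens."""
    return re.findall(r"[a-z0-9_]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, documents, top_k=1):
    """Return the top_k (doc_id, score) pairs most similar to the query."""
    q = Counter(tokenize(query))
    scored = [(doc_id, cosine(q, Counter(tokenize(text)))) for doc_id, text in documents.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical knowledge base: internal docs a team might index.
documents = {
    "auth-guide": "Use the internal auth_client to obtain tokens before calling payment APIs",
    "logging-std": "All services must log in JSON format using the shared logger",
    "db-conventions": "Database tables use snake_case names and UUID primary keys",
}
print(retrieve("how do I get a token to call the payment API", documents))
```

Note that this only finds and ranks existing text; nothing new is produced, which is why RAG pairs it with a generative model.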

As a result, when a developer is implementing a new algorithm and runs into difficulties, StackSpot AI can produce code snippets that fit the developer’s particular situation, informed by the documents found by the retrieval component.
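The hand-off between the two components can be sketched as prompt augmentation: retrieved snippets are placed into the prompt alongside the developer’s request before the model is called. This is an assumption-laden illustration, not StackSpot AI’s implementation; the prompt template and the names `build_prompt`, `answer`, and `fake_llm` are hypothetical, and `fake_llm` stands in for a real call to a model such as GPT-4 so that the example runs offline.

```python
from typing import Callable, List


def build_prompt(question: str, context_snippets: List[str]) -> str:
    """Place retrieved project documents in front of the developer's request."""
    context = "\n".join(f"- {snippet}" for snippet in context_snippets)
    return (
        "You are a coding assistant. Follow the project context when writing code.\n\n"
        f"Project context:\n{context}\n\n"
        f"Developer request:\n{question}\n"
    )


def answer(question: str, context_snippets: List[str], llm: Callable[[str], str]) -> str:
    """Generation step: the model sees the request grounded in retrieved context."""
    return llm(build_prompt(question, context_snippets))


def fake_llm(prompt: str) -> str:
    # Hypothetical stand-in for a real model call (for example, to GPT-4).
    return f"[model response to a {len(prompt.splitlines())}-line prompt]"


# Usage with a snippet that a retrieval step might have returned.
snippets = ["Use the internal auth_client to obtain tokens before calling payment APIs"]
request = "Write a function that charges a customer's card"
print(build_prompt(request, snippets))
print(answer(request, snippets, fake_llm))
```

Passing the model in as a callable keeps the augmentation logic independent of any particular model API, so the stub can be swapped for a real client without changing `build_prompt`.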

StackSpot AI combines advanced retrieval with generative capabilities to provide developers with precise and context-sensitive support.

Conclusion

The advent of Transformer-based models and LLMs represents a significant milestone in AI and natural language processing. Their ability to understand and generate contextually relevant text has opened new horizons in applications ranging from virtual assistants to software development.

Looking ahead, the continued advancement and refinement of LLMs and their applications across domains suggest a future in which AI plays an increasingly integral role in many aspects of daily life.

With StackSpot AI, we are eager to join this new wave of contextualized coding assistants, helping developers write better code faster.